High Performance Computing (HPC)

What is HPC?

The volume of data generated by healthcare systems, research, and clinical trials is growing at an exponential rate, far outpacing what conventional computing can process in a reasonable time. High-performance computing (HPC) addresses this gap by enabling the analysis of massive datasets and the execution of complex calculations at very high speeds.

To answer this question, we can borrow from the old saying: "Many hands make light work." With HPC, hundreds or thousands of nodes (individual computers) work together in clusters (teams) and can solve these problems much faster than a single large, powerful computer could on its own. Each interconnected computer works on a small piece of the problem simultaneously, so together the cluster can tackle problems far too large or complex for any one machine.
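The divide-and-combine idea behind the saying can be sketched in a few lines of Python. This is a minimal illustration, not how the cluster's scheduler actually distributes work: `split_into_chunks`, `work_on_chunk`, and `solve` are hypothetical names, and by default the chunks run one after another. On a cluster, each chunk would instead run as a separate job on its own node.

```python
from typing import Callable, List, Sequence


def split_into_chunks(items: Sequence[int], n_workers: int) -> List[Sequence[int]]:
    """Divide items into n_workers contiguous chunks whose sizes differ by at most one."""
    if n_workers < 1:
        raise ValueError("n_workers must be at least 1")
    size, remainder = divmod(len(items), n_workers)
    chunks = []
    start = 0
    for i in range(n_workers):
        # The first `remainder` chunks each take one extra item.
        end = start + size + (1 if i < remainder else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def work_on_chunk(chunk: Sequence[int]) -> int:
    """The 'small piece of the problem' one worker handles: here, a sum of squares."""
    return sum(x * x for x in chunk)


def solve(items: Sequence[int], n_workers: int, mapper: Callable = map) -> int:
    """Scatter the chunks to workers via `mapper`, then combine the partial results."""
    partial_results = mapper(work_on_chunk, split_into_chunks(items, n_workers))
    return sum(partial_results)


print(solve(range(1_000), 8))  # prints 332833500
```

Passing `concurrent.futures.ProcessPoolExecutor().map` as `mapper` (inside an `if __name__ == "__main__":` guard) runs the chunks in parallel across the cores of one machine. Keeping the split, the per-chunk work, and the combine step separate mirrors how work is typically divided across many nodes.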

Access to HPC resources is available through the Unified Learning Environment for Analytics & Data (ULEAD), a secure, Compliance-approved platform designed to protect sensitive data while delivering high-performance computing capabilities. With ULEAD, researchers and analysts can confidently leverage HPC resources while maintaining the highest standards of data security and regulatory compliance.

HPC Advantages

High-performance computing (HPC) brings a host of benefits, especially when running in a cloud environment. Here are some key advantages:

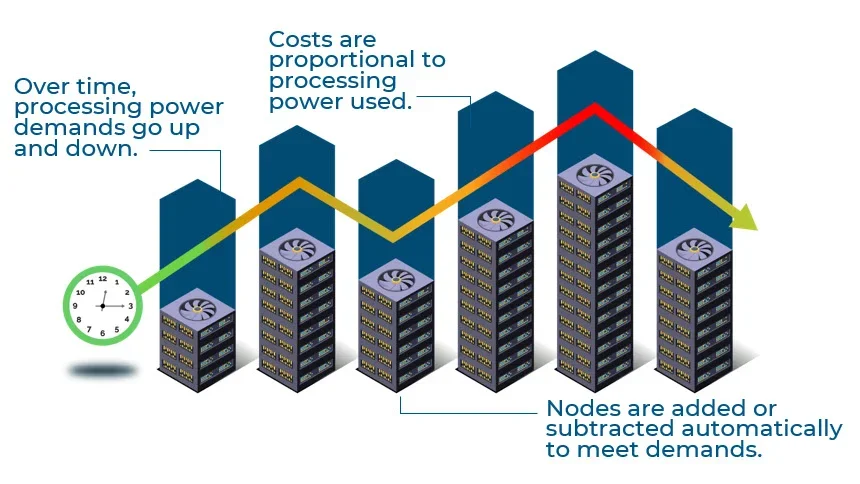

Auto-Scaling for Cost Efficiency: One of the most powerful features of cloud-based HPC is its ability to auto-scale, meaning that computing resources are adjusted dynamically to match the demands of your workload. As your computational needs grow, more nodes (individual computers) are added automatically; when demand decreases, the system scales down by removing excess nodes. This ensures that you use, and pay for, only the resources you need at any given time, avoiding the cost of maintaining expensive, underutilized servers.
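The scale-up and scale-down decision can be illustrated with a short Python sketch. It assumes a hypothetical policy: size the cluster to the total cores requested by queued and running jobs, within a minimum and maximum node count. `ScalingPolicy` and `scaling_action` are illustrative names, not the cluster's actual autoscaler, which may use different rules (for example, idle timeouts before removing a node).

```python
import math
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    cores_per_node: int  # e.g. 16 for a 16-core node type
    min_nodes: int = 0   # scale all the way down when the queue is empty
    max_nodes: int = 10  # hypothetical cap that bounds the monthly bill

    def target_nodes(self, pending_cores: int, busy_cores: int) -> int:
        """Nodes needed to cover queued and running work, clamped to the allowed range."""
        needed = math.ceil((pending_cores + busy_cores) / self.cores_per_node)
        return max(self.min_nodes, min(self.max_nodes, needed))


def scaling_action(policy: ScalingPolicy, current_nodes: int,
                   pending_cores: int, busy_cores: int) -> str:
    """Compare the target cluster size with the current size and decide what to do."""
    target = policy.target_nodes(pending_cores, busy_cores)
    if target > current_nodes:
        return f"add {target - current_nodes} node(s)"
    if target < current_nodes:
        return f"remove {current_nodes - target} idle node(s)"
    return "no change"


policy = ScalingPolicy(cores_per_node=16)
print(scaling_action(policy, current_nodes=1, pending_cores=40, busy_cores=0))  # add 2 node(s)
print(scaling_action(policy, current_nodes=3, pending_cores=0, busy_cores=0))   # remove 3 idle node(s)
```

The sketch simplifies by assuming running work is packed onto as few nodes as possible; a real scheduler only removes nodes that are actually idle.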

Access to Cutting-Edge Technology: Running HPC in the cloud gives you access to the latest state-of-the-art computing hardware. The cloud provider handles hardware refreshes, so you avoid costly upgrades and downtime due to aging infrastructure.

UCLA Health's HPC Capabilities

Customized Clusters: UCLA Health offers a flexible HPC cluster that supports compute-optimized, memory-optimized, AI/ML, and other specialized workloads.

Integration with Azure Services: The cluster integrates with Azure Data Lake Storage for scalable and efficient data management, covering both frequently accessed (hot tier) and infrequently accessed (cool tier) data.

Specialized Software: The cluster supports specialized software such as NVIDIA CUDA drivers and Illumina DRAGEN for secondary genomic analysis, along with more than 7,000 software packages available through Spack.

Specialized Hardware: Includes support for GPUs (NVIDIA T4, A100) and FPGAs (Xilinx U250), ideal for deep learning, AI training, large-scale simulations, and other high-demand computational tasks.

Technical Specifications for the Current HPC Cluster

Development Work Partition (F2): Ideal for small-scale testing, development, and job submission, the F2 partition provides a lightweight, low-cost environment for early-stage software development, debugging, and workflow design.

- Resources: 2 CPU cores, 4 GB of memory, and 32 GB of scratch space per node.

- Key Features: The F2 partition allows users to test code and develop applications with minimal resources while keeping costs low. The 32 GB scratch space provides ample temporary storage for intermediate computations.

- Enhanced Development Tools: Users can keep terminal sessions running after disconnecting by using tmux, and can access their nodes remotely over SSH from tools such as VS Code and Jupyter. This offers greater control and flexibility than command-line access alone.

Compute-Optimized Partitions (F16, F32, F64, F72): Four node sizes with varying CPU core counts and memory, designed for large-scale simulations and compute-intensive tasks.

- F16: 16 CPU cores, 32 GB of memory, and 512 GB of scratch space.

- F32: 32 CPU cores, 64 GB of memory, and 2000 GB of scratch space.

- F64: 64 CPU cores, 128 GB of memory, and 2000 GB of scratch space. These nodes are ideal for large-scale simulations, data analysis, and other compute-intensive applications.

- F72: 72 CPU cores, 144 GB of memory, and 2000 GB of scratch space. A single F72 node can run a genomics meta-pipeline to process sequencing data, and many such pipelines can run in parallel on separate nodes.
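Choosing among these partitions amounts to finding the cheapest node that satisfies a job's core, memory, and scratch requirements. The Python sketch below encodes the specifications listed above, with pay-as-you-go prices from the cost estimate table on this page. `Partition` and `pick_partition` are hypothetical helpers, and the sketch assumes the job fits on a single node.

```python
from typing import NamedTuple, Optional


class Partition(NamedTuple):
    name: str
    cores: int
    memory_gb: int
    scratch_gb: int
    payg_monthly_usd: float  # pay-as-you-go monthly price from the cost table


# Node specifications from the partition list; prices from the cost estimate table.
COMPUTE_PARTITIONS = [
    Partition("F16", 16, 32, 512, 313.34),
    Partition("F32", 32, 64, 2000, 569.62),
    Partition("F64", 64, 128, 2000, 1248.45),
    Partition("F72", 72, 144, 2000, 1374.66),
]


def pick_partition(cores: int, memory_gb: int, scratch_gb: int) -> Optional[Partition]:
    """Return the cheapest single node that meets every requirement, or None if none does."""
    candidates = [
        p for p in COMPUTE_PARTITIONS
        if p.cores >= cores and p.memory_gb >= memory_gb and p.scratch_gb >= scratch_gb
    ]
    return min(candidates, key=lambda p: p.payg_monthly_usd, default=None)


print(pick_partition(cores=20, memory_gb=40, scratch_gb=100).name)  # F32
print(pick_partition(cores=8, memory_gb=16, scratch_gb=600).name)   # F32 (F16 scratch is too small)
```

Note that scratch space can drive the choice as much as cores: a small job needing more than 512 GB of temporary storage still lands on F32.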

GPU-Enabled Compute Partitions: For AI and machine learning workloads, including high-end deep learning training, the GPU-enabled partitions provide the computational power these specialized tasks require.

- NVIDIA T4 GPU options

- NVIDIA A100 GPU options (1, 2, or 4 GPUs per node)

FPGA-Enabled Compute Partition: The cluster also supports FPGA-enabled nodes from the Azure NP series. These nodes are equipped with Xilinx U250 FPGAs, providing high-throughput, low-latency, customizable processing for specialized workloads that benefit from parallel processing and reconfigurable logic.

Azure Data Lake Storage: The integration of Azure Data Lake Storage in both hot and cool tiers provides robust, scalable shared storage. The hot tier suits frequently accessed data, offering the low latency and high throughput essential for real-time data processing tasks. The cool tier offers lower storage costs for infrequently accessed data. An archive tier is also available for ultra-low-cost, long-term offline storage.
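The trade-off among the three tiers can be expressed as a simple rule of thumb based on how recently data was read. In this Python sketch, the 30- and 180-day thresholds and the `suggest_tier` function are hypothetical illustrations, not OHIA policy or Azure's own lifecycle rules; real tier decisions also depend on access and retrieval pricing.

```python
HOT_WINDOW_DAYS = 30      # hypothetical: data read within the last month stays hot
ARCHIVE_AFTER_DAYS = 180  # hypothetical: data untouched for about six months may be archived


def suggest_tier(days_since_last_access: int, must_stay_online: bool = True) -> str:
    """Suggest a storage tier from how recently data was read (illustrative rule only)."""
    if days_since_last_access < 0:
        raise ValueError("days_since_last_access cannot be negative")
    if days_since_last_access <= HOT_WINDOW_DAYS:
        return "hot"
    # Archive is offline storage, so only data that need not be readable immediately goes there.
    if days_since_last_access >= ARCHIVE_AFTER_DAYS and not must_stay_online:
        return "archive"
    return "cool"


print(suggest_tier(3))                             # hot
print(suggest_tier(400))                           # cool
print(suggest_tier(400, must_stay_online=False))   # archive
```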

Lustre Parallel File System (Coming Soon): Azure Managed Lustre (AMLFS) provides a high-performance parallel file system for workloads requiring high throughput and low latency. It will be integrated with the HPC cluster, making it well suited to large-scale simulations and data processing tasks.

HPC Component and Overhead Costs

As the largest UC consumer of Azure services, UCLA Health IT has a strong partnership with Microsoft and AWS that allows us to negotiate discounts directly across the entire Health IT organization. As an academic medical institution, we receive negotiated higher-education discounts that are among the most competitive available to cloud customers. These reflect UC-wide discounts on all services, plus deep UCLA Health-specific discounts on our most heavily used services, primarily high-performance computing workloads and data storage. Customers can also reserve capacity in the data center for one- or three-year terms; three-year reservations generally lock in the deepest discounts.

What does each component cost? The components can be separated into shared and individual resources.

Shared Resources:

- Scheduler

- Orchestrator

- Storage of shared data sets

- Networking and ancillary services

Individual Resources:

- Development environment

- Compute Nodes

- Storage of private/personal data sets

Running an HPC cluster requires some resources to run 24/7 in order to:

- Support user logins at any time of day

- Accept any number of new jobs, large or small, at any time

- Support jobs that run on the cluster for days or weeks

- Maintain active monitoring, diagnostics, threat detection, security posture, audit logging, and related services

Estimated Monthly Overhead Costs

Small Cluster (1-5 users): ~$1,800/month

Medium Cluster (6-10 users): ~$3,500/month

Large Cluster (>10 users): Contact OHIA for consultation

Test Cluster (<11 users): ~$1,500/month
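These tiers amount to a simple lookup on the number of users. The Python sketch below encodes them and, as an illustrative assumption, splits the overhead evenly among users. `monthly_overhead` and `overhead_per_user` are hypothetical helpers, and the figures are the approximate estimates listed above, not quotes.

```python
from typing import Optional


def monthly_overhead(users: int, test_cluster: bool = False) -> Optional[float]:
    """Approximate monthly overhead (USD) from the tiers above; None means 'contact OHIA'."""
    if users < 1:
        raise ValueError("a cluster needs at least one user")
    if test_cluster:
        return 1500.0 if users < 11 else None
    if users <= 5:
        return 1800.0  # small cluster
    if users <= 10:
        return 3500.0  # medium cluster
    return None        # large cluster: priced through consultation


def overhead_per_user(users: int, test_cluster: bool = False) -> Optional[float]:
    """Split the overhead evenly across users (an illustrative assumption)."""
    total = monthly_overhead(users, test_cluster)
    return None if total is None else total / users


for n in (1, 5, 6, 10):
    print(n, round(overhead_per_user(n), 2))
```

Under the even-split assumption, the per-user share falls from $1,800 with one user to $360 with five, then rises to about $583 at six users when the cluster moves into the medium tier, before falling again to $350 at ten.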

HPC Cost Estimates

| VM Compute Type | Node Name | Azure SKU Name | CPU | Memory | Accelerator Memory | Pay-as-You-Go ($/month) | 1-Yr Reserved Instance ($/month) | 3-Yr Reserved Instance ($/month) |
|---|---|---|---|---|---|---|---|---|
| Developer | Dev Node | F2s v2 | 2 vCPUs | 4 GB RAM | N/A | $34.27 | - | $25.41 |
| Compute Optimized | Interactive Compute Node | F16s v2 | 16 vCPUs | 32 GB RAM | N/A | $313.34 | $242.34 | - |
| Compute Optimized | Large Compute Node | F32s v2 | 32 vCPUs | 64 GB RAM | N/A | $569.62 | $428.01 | - |
| Compute Optimized | Double Large Compute Node | F64s v2 | 64 vCPUs | 128 GB RAM | N/A | $1,248.45 | $965.00 | - |
| Compute Optimized | Heavy Compute Node | F72s v2 | 72 vCPUs | 144 GB RAM | N/A | $1,374.66 | $1,055.76 | - |
| GPU | AI/ML GPU (A100) | NC24ads A100 | 24 vCPUs | 220 GB RAM | 80 GB HBM2e | $2,414.55 | $1,752.72 | $996.42 |
| GPU | AI/ML Double GPU (2 x A100) | NC48ads A100 | 48 vCPUs | 440 GB RAM | 160 GB HBM2e | $4,827.71 | $3,505.50 | $3,981.50 |
| GPU | AI/ML Quad GPU (4 x A100) | NC96ads A100 | 96 vCPUs | 880 GB RAM | 320 GB HBM2e | $9,654.03 | $7,011.00 | $3,981.50 |
| Memory Optimized | Ultra RAM Node | M64s | 64 vCPUs | 1024 GB RAM | N/A | $2,675.19 | $1,599.30 | - |
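Whether a reservation beats pay-as-you-go depends on how much of the month a node actually runs. The Python sketch below uses two rows from the table and rests on two assumptions not stated in the table: the pay-as-you-go monthly price reflects a node running 24/7 and is prorated for partial use, while a reservation is billed in full whether or not the node runs. `PRICES`, `savings_pct`, `payg_cost`, and `break_even_utilization` are hypothetical names.

```python
from typing import Dict, Optional

# Monthly prices (USD) for two rows of the cost table; None marks a term with no listed price.
PRICES: Dict[str, Dict[str, Optional[float]]] = {
    "F16s v2": {"payg": 313.34, "1yr": 242.34, "3yr": None},
    "NC24ads A100": {"payg": 2414.55, "1yr": 1752.72, "3yr": 996.42},
}


def reserved_price(sku: str, term: str) -> float:
    """Look up a reserved monthly price, failing loudly if none is listed."""
    price = PRICES[sku][term]
    if price is None:
        raise ValueError(f"no {term} reserved price listed for {sku}")
    return price


def savings_pct(sku: str, term: str) -> float:
    """Percent saved by reserving versus running the node pay-as-you-go all month."""
    payg = PRICES[sku]["payg"]
    return 100 * (payg - reserved_price(sku, term)) / payg


def payg_cost(sku: str, utilization: float) -> float:
    """Assumed prorated pay-as-you-go cost for a node running a fraction (0 to 1) of the month."""
    if not 0 <= utilization <= 1:
        raise ValueError("utilization must be between 0 and 1")
    return PRICES[sku]["payg"] * utilization


def break_even_utilization(sku: str, term: str) -> float:
    """Fraction of the month above which the reservation is cheaper than pay-as-you-go."""
    return reserved_price(sku, term) / PRICES[sku]["payg"]


print(f"{savings_pct('F16s v2', '1yr'):.1f}% saved")                       # 22.7% saved
print(f"{break_even_utilization('NC24ads A100', '3yr'):.0%} break-even")   # 41% break-even
```

Under these assumptions, an F16s v2 node would need to run roughly 77% of the month before its 1-year reservation pays off, whereas the 3-year single-A100 reservation breaks even at about 41%. Lightly used node types are therefore usually better served by auto-scaling on pay-as-you-go pricing.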

Support

UCLA Health’s Office of Health Informatics and Analytics (OHIA) can help set up and manage an HPC environment for you.

We’d love to hear about your data and computing challenges and how we can help you overcome them. Please feel free to contact OHIA’s High Performance Computing Team to set up a consultation today!

Support Email: OHIAHPCSupport@mednet.ucla.edu